LidarSensor

Introduction

LidarSensor is the component that simulates the LiDAR (Light Detection and Ranging) sensor.

LiDAR works by emitting laser beams that bounce off objects in the environment, and then measuring the time it takes for the reflected beams to return, allowing the sensor to create a 3D map of the surroundings.

This data is used for object detection, localization, and mapping.

LiDAR in an autonomous vehicle can be used for many purposes. The ones mounted on the top of autonomous vehicles are primarily used

- to scan the environment for localization in space

- to detect and identify obstacles such as approaching vehicles, pedestrians or other objects in the driving path.

LiDARs placed on the left and right sides of the vehicle are mainly used to monitor the traffic lane and detect vehicles moving in adjacent lanes, enabling safe maneuvers such as lane changing or turning.

LidarSensor component is a part of RGLUnityPlugin that integrates the external RobotecGPULidar (RGL) library with Unity. RGL also allows to provide additional information about objects, more about it here.

Use RGL in your scene

If you want to use RGL in your scene, make sure the scene has an SceneManager component added and all objects meet the usage requirements.

RGL default scenes

If you would like to see how LidarSensor works using RGL or run some tests, we encourage you to familiarize yourself with the RGL test scenes section.

Supported LiDARs

The current scripts implementation allows you to configure the prefab for any mechanical LiDAR. You can read about how to do it here. MEMS-based LiDARs due to their different design are not yet fully supported.

Comparing with Unity Raycaster

By default, the Unity Raycaster (Physics.Raycast) does not hit in the backside of the triangles. In contrast, RGL prevents the triangles to be culled due to their orientation and rays hit them even if rays back-facing mesh triangles. Be aware of it when comparing point clouds. To achieve Unity Raycaster back-facing hits, the Physics.queriesHitBackfaces property must be enabled. On the image below the red point is captured by Unity Raycaster (default configuration) and the blue point is captured by RGL.

RGL distance measurement errors

According to tests conducted by the Robotec team, the distance measurement error of the RGL library in most cases is of the order of 32-bit machine epsilon and thus unnoticeable in Unity. The only case when distance measured by RGL has any noticeable error (i.e. greater than machine epsilon) is combination of extreme translation and rotation of the sensor. When sensor with its target are translated by 90 kilometers from world origin, and rotated very slightly, then relative distance from sensor to target was measured as 1,000257 meters comparing to 1 meter in reality. Those gives 0,0257% error.

Prefabs

Prefabs can be found under the following path:

Assets/AWSIM/Prefabs/Sensors/RobotecGPULidars/*

The table of available prefabs can be found below:

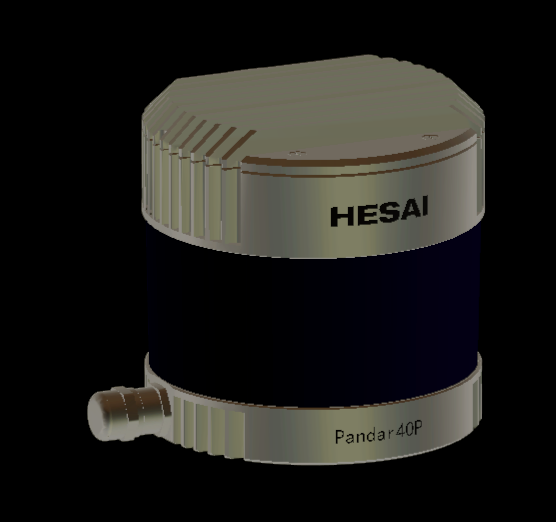

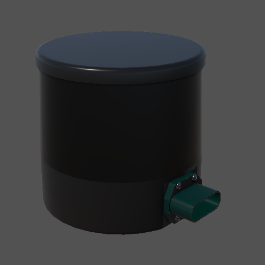

| LiDAR | Path | Appearance |

|---|---|---|

| HESAI Pandar40P | HesaiPandar40P.prefab |

|

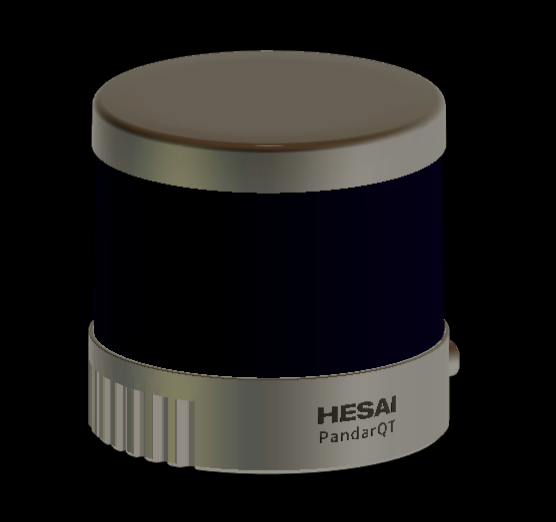

| HESAI PandarQT64 | HesaiPandarQT64.prefab |

|

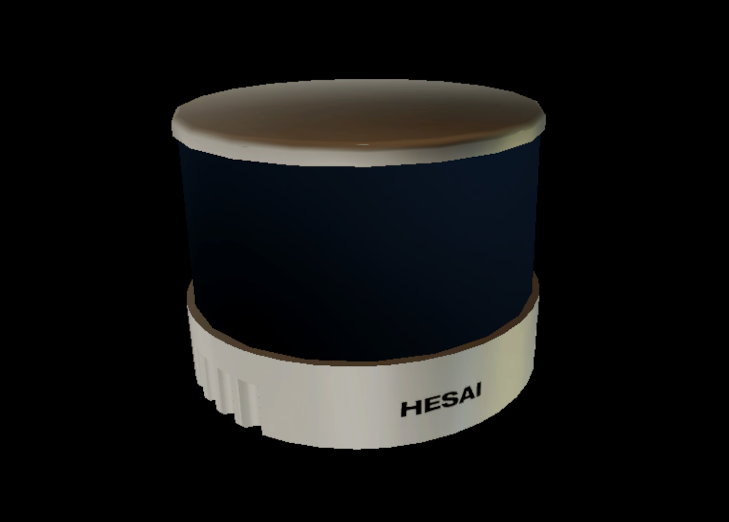

| HESAI PandarXT32 | HesaiPandarXT32.prefab |

|

| HESAI QT128C2X | HesaiQT128C2X.prefab |

|

| HESAI Pandar128E4X | HesaiPandar128E4X.prefab |

|

| HESAI AT128 E2X | HesaiAT128E2X.prefab |

|

| Ouster OS1-64 | OusterOS1-64.prefab |

|

| Velodyne VLP-16 | VelodyneVLP16.prefab |

|

| Velodyne VLC-32C | VelodyneVLP32C.prefab |

|

| Velodyne VLS-128-AP | VelodyneVLS128.prefab |

|

Link in the default Scene

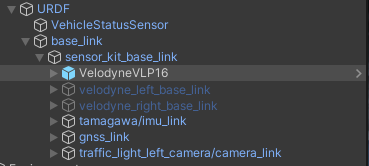

LidarSensor is configured in default vehicle EgoVehicle prefab.

It is added to URDF object as a child of sensor_kit_base_link.

LidarSensor placed in this way does not have its own frame, and the data is published relative to sensor_kit_base_link.

More details about the location of the sensors in the vehicle can be found here.

A detailed description of the URDF structure and sensors added to prefab Lexus RX450h 2015 is available in this section.

Additional LiDARs

For a LiDAR placed on the left side, right side or rear, an additional link should be defined.

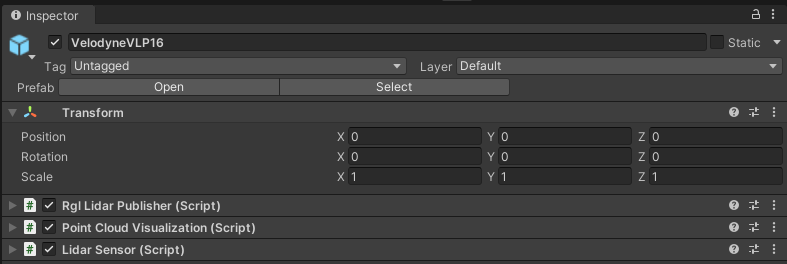

Components and Resources

The LiDAR sensor simulation functionality is split into three components:

- Lidar Sensor (script) - provides lidar configuration, creates RGL pipeline to simulate lidar, and performs native RGL raytrace calls,

- Rgl Lidar Publisher (script) - extends RGL pipeline with nodes to publish ROS2 messages.

- Point Cloud Visualization (script) - visualizes point cloud collected by sensor.

Moreover, the scripts use Resources to provide configuration for prefabs of supported lidar models:

- LaserModels - provides a list of supported models,

- LaserArrayLibrary - provides data related to laser array construction for supported models,

- LaserConfigurationLibrary - provides full configuration, with ranges and noise for supported models.

These are elements of the RGLUnityPlugin, you can read more here.

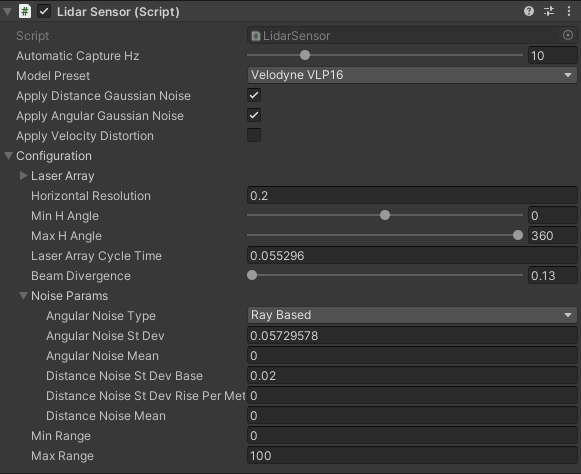

Lidar Sensor (script)

This is the main component that creates the RGL node pipeline for the LiDAR simulation.

The pipeline consists of:

- setting ray pattern,

- transforming rays to represent pose of the sensor on the scene,

- applying Gaussian noise,

- performing raytracing,

- removing non-hits from the result point cloud,

- transforming point cloud to sensor frame coordinate system.

Elements configurable from the editor level

Automatic Capture Hz- the rate of sensor processing (default:10Hz)Model Preset- allows selecting one of the built-in LiDAR models (default:RangeMeter)Return Mode- allows selecting LiDAR return mode (default:Single Return First). Both single and multi return modes may be selected, including various return types. Return types may be base on distance from LiDAR (e.g. first or last) or on instance intensity (strongest and second strongest). Note that dual return modes are reasonable only when beam divergence simulation is enabled (see parameter below). If beam divergence simulation is disabled and one of dual return modes is selected, then the same hit point will be returned for each return type (two exactly same hit points for each LiDAR ray). Available return modes are:Single Return FirstSingle Return SecondSingle Return LastSingle Return StrongestDual Return Last StrongestDual Return First LastDual Return First StrongestDual Return Strongest Second StrongestDual Return First Second

Apply Distance Gaussian Noise- enable/disable distance Gaussian noise (default:true)Apply Angular Gaussian Noise- enable/disable angular Gaussian noise (default:true)Apply Velocity Distortion- enable/disable velocity distortion (default:false)Simulate Beam Divergence- enable/disable beam divergence simulation (default:false). Setting this tofalseeffectively cause RGL to ignore horizontal and vertical beam divergence values (as they would be set to 0). Note that simulating beam divergence requires significantly more processing power - if you observe any issues with the framerate, it is suggested to disable beam divergence simulation.-

Configuration:

Laser Array- geometry description of lidar's array of lasers, should be prepared on the basis of the manual for a given model of LiDAR (default: loaded fromLaserArrayLibrary)Horizontal Resolution- the horiontal resolution of laser array firingsMin H Angle- minimum horizontal angle, left (default:0)Max H Angle- maximum horizontal angle, right (default:0)Laser Array Cycle Time- time between two consecutive firings of the whole laser array in milliseconds (default:0); used for velocity distortion feature.Horizontal Beam Divergence- represents horizontal deviation of photons from a single beam emitted by a LiDAR sensor (in degrees);Vertical Beam Divergence- represents vertical deviation of photons from a single beam emitted by a LiDAR sensor (in degrees);- Noise Params:

Angular Noise Type- angular noise type

(default:Ray Based)Angular Noise St Dev- angular noise standard deviation in degree

(default:0.05729578)Angular Noise Mean- angular noise mean in degrees

(default:0)Distance Noise St Dev Base- distance noise standard deviation base in meters

(default:0.02)Distance Noise Rise Per Meter- distance noise standard deviation rise per meter

(default:0)Distance Noise Mean- distance noise mean in meters

(default:0)

-

Output Restriction Params:

Apply Restriction- enable/disable fault injection (default:false)Rectangular Restriction Masks- list of rectangular masks used for output restriction; each mask is represented via ranges of angles in horizontal and vertical dimensionsEnable Periodic Restriction- change mode from static to periodic (default:false)Restriction Period- time of whole period in secondsRestriction Duty Rate- rate of time with masked outputEnable Restriction Randomizer- enable/disable random periodic mode (default:false)Min Random Period- lower bound of time period in seconds used in random modeMax Random Period- upper bound of time period in seconds used in random mode

-

Additional options (available for some Lidar Model Preset)

Min Range- minimum range of the sensor (if not avaiable, the range is different for each laser inLaser Array)Max Range- maximum range of the sensor (if not avaiable, the range is different for each laser inLaser Array)High Resolution Mode Enabled- whether to activate high resolution mode (available forHesai Pandar 128E4XLiDAR model)

Output Data

LidarSensor provides public methods to extend this pipeline with additional RGL nodes.

In this way, other components can request point cloud processing operations and receive data in the desired format.

Example of how to get XYZ point cloud data:

- To obtain point cloud data from another component you have to create a new

RGLNodeSequencewith RGL node to yield XYZ field and connect it toLidarSensor:rglOutSubgraph = new RGLNodeSequence().AddNodePointsYield("OUT_XYZ", RGLField.XYZ_F32); lidarSensor = GetComponent<LidarSensor>(); lidarSensor.ConnectToWorldFrame(rglOutSubgraph); // you can also connect to Lidar frame using ConnectToLidarFrame // You can add a callback to receive a notification when new data is ready lidarSensor.onNewData += HandleLidarDataMethod; - To get data from

RGLNodeSequencecallGetResultData:Vector3[] xyz = new Vector3[0]; rglOutSubgraph.GetResultData<Vector3>(ref xyz);

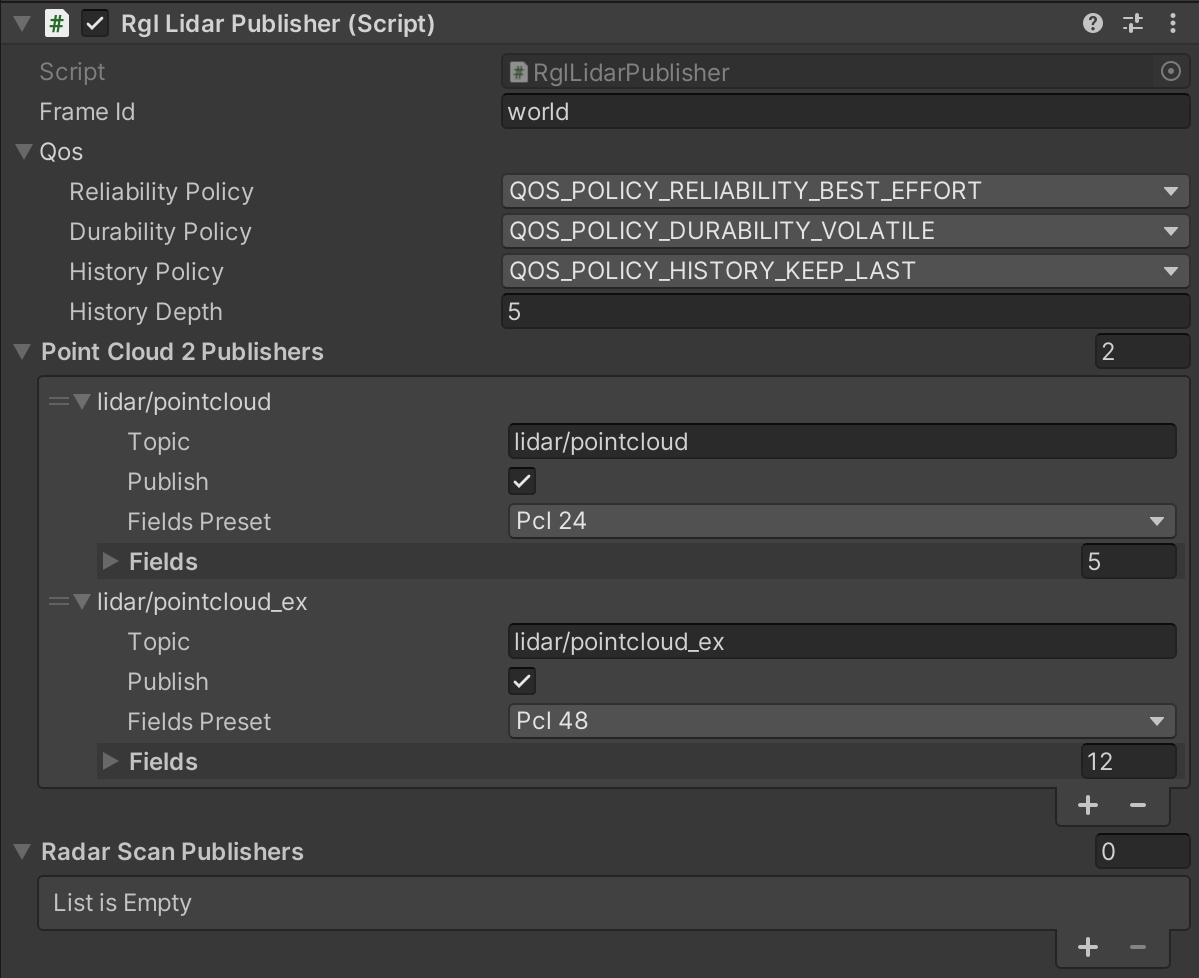

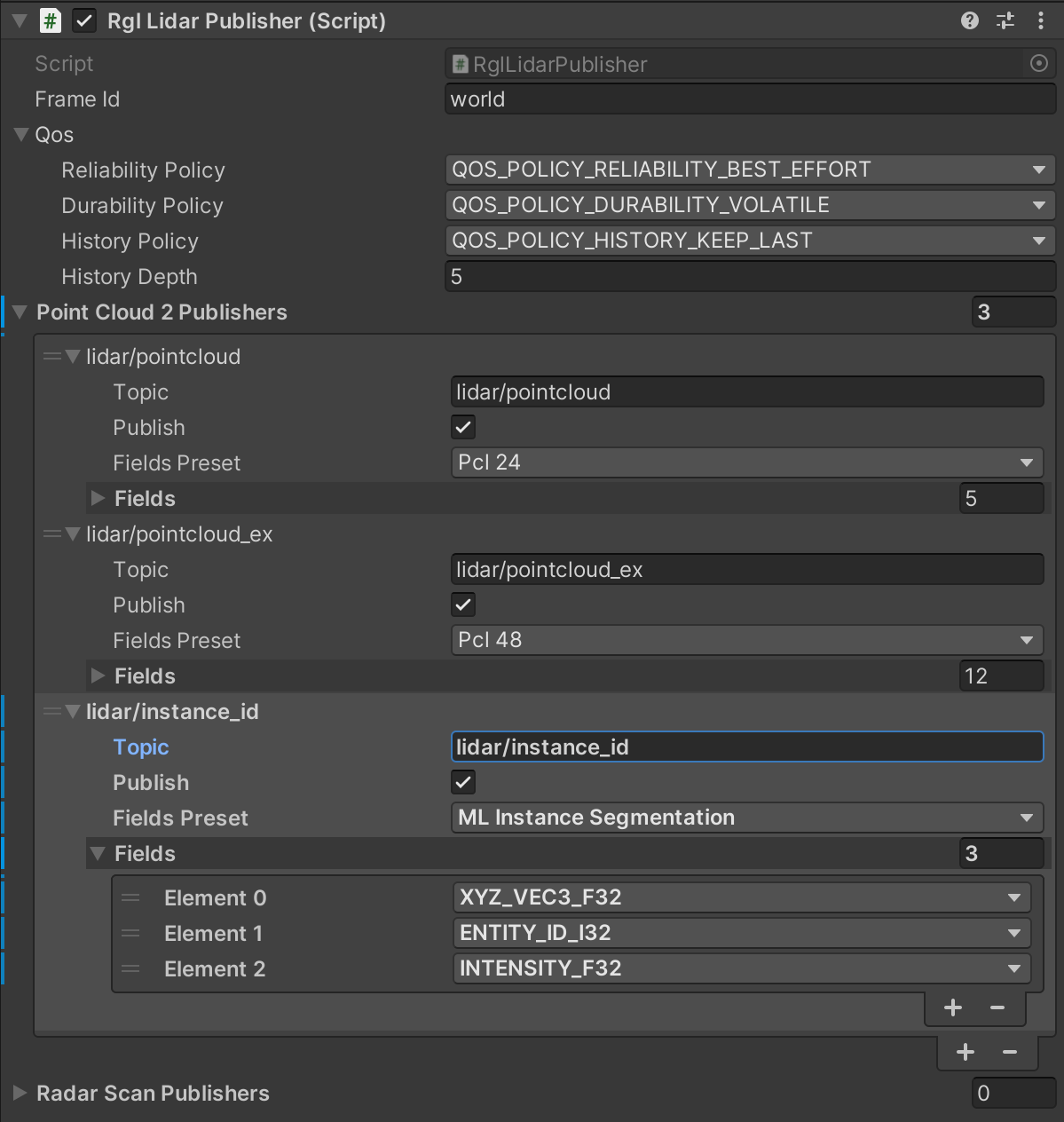

Rgl Lidar Publisher (script)

RglLidarPublisher extends the main RGL pipeline created in LidarSensor with RGL nodes that produce point clouds in specific format and publish them to the ROS2 topic.

Thanks to the ROS2 integration with RGL, point clouds can be published directly from the native library.

RGL creates ROS2 node named /RobotecGPULidar with publishers generated by RGL nodes.

Currently, RglLidarPublisher implements ROS2 publishers for two message types:

PointCloud2 message allows publishing point clouds with different points attributes (described by fields parameter). In order to easily select different frequently used field sets RglLidarPublisher has several field presets defined:

| Preset | Description | Fields |

|---|---|---|

| Pcl 24 | 24-byte point cloud format used by Autoware | XYZ_VEC3_F32, PADDING_32, INTENSITY_F32, RING_ID_U16, PADDING_16 |

| PointXYZIRCAEDT | PointXYZIRCAEDT format used by Autoware | XYZ_VEC3_F32, INTENSITY_U8, RETURN_TYPE_U8, RING_ID_U16, AZIMUTH_F32, ELEVATION_F32, DISTANCE_F32, TIME_STAMP_U32 |

| Pcl 48 | 48-byte extended version point cloud format used by Autoware (legacy) | XYZ_VEC3_F32, PADDING_32, INTENSITY_F32, RING_ID_U16, PADDING_16, AZIMUTH_F32, DISTANCE_F32, RETURN_TYPE_U8, PADDING_8, PADDING_16, PADDING_32, TIME_STAMP_F64 |

| ML Instance Segmentation | Machine learning format for instance/semantic segmentation tasks | XYZ_VEC3_F32, ENTITY_ID_I32, INTENSITY_F32 |

| Radar Smart Micro | Format used in Radar Smart Micro | XYZ_VEC3_F32, RADIAL_SPEED_F32, POWER_F32, RCS_F32, NOISE_F32, SNR_F32 |

| Custom | Empty format that allows the user to define its fieldsets |

PointXYZIRCAEDT format

For a better understanding of the PointXYZIRCAEDT format, we encourage you to familiarize yourself with the point cloud pre-processing process in Autoware, which is described here.

Elements configurable from the editor level

Frame ID- frame in which data are published, used inHeader(default:"world")Qos- Quality of service profile used in the publicationReliability Policy- Reliability policy (default:Best effort)Durability Policy- Durability policy (default:Volatile)History Policy- History policy (default:Keep last)History Depth- History depth. If history policy isKeep all, depth is ignored. (default:5)

Point Cloud 2 Publishers- List of sensor_msgs/PointCloud2 message publishersTopic- Topic name to publish onPublish- If false, publishing will be stoppedFields Preset- allows selecting one of the pre-defined fieldsets (chooseCustomto define your own)Fields- List of fields to be present in the message

Radar Scan Publishers- List of radar_msgs/RadarScan message publishersTopic- Topic name to publish onPublish- If false, publishing will be stopped

Elements configurable in simulation runtime

Once the simulation starts, only the Publish flag is handled. All of the publishers are initialized on the simulation startup and updates of their parameters are not supported in runtime. Any changes to the publishing configuration are ignored.

Default Publishing Topics

- Frequency:

10Hz - QoS:

Best effort,Volatile,Keep last/5

| Category | Topic | Message type | frame_id |

|---|---|---|---|

| PointCloud 24-byte format | /lidar/pointcloud |

sensor_msgs/PointCloud2 |

world |

| PointXYZIRCAEDT format | /lidar/pointcloud_ex |

sensor_msgs/PointCloud2 |

world |

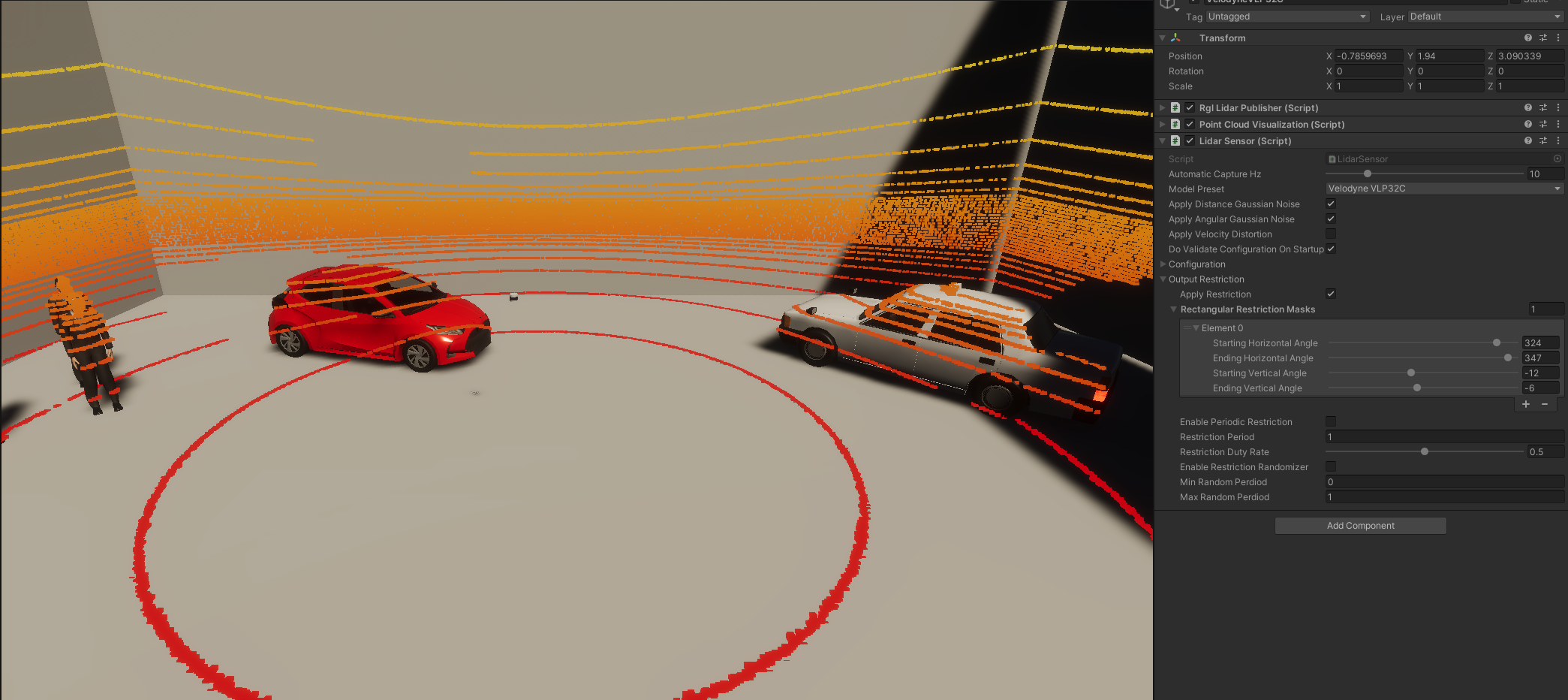

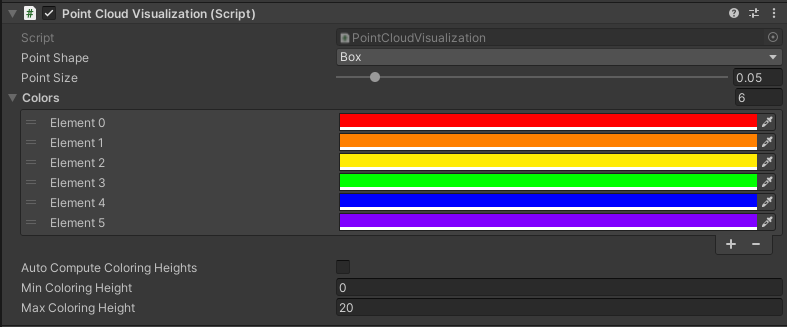

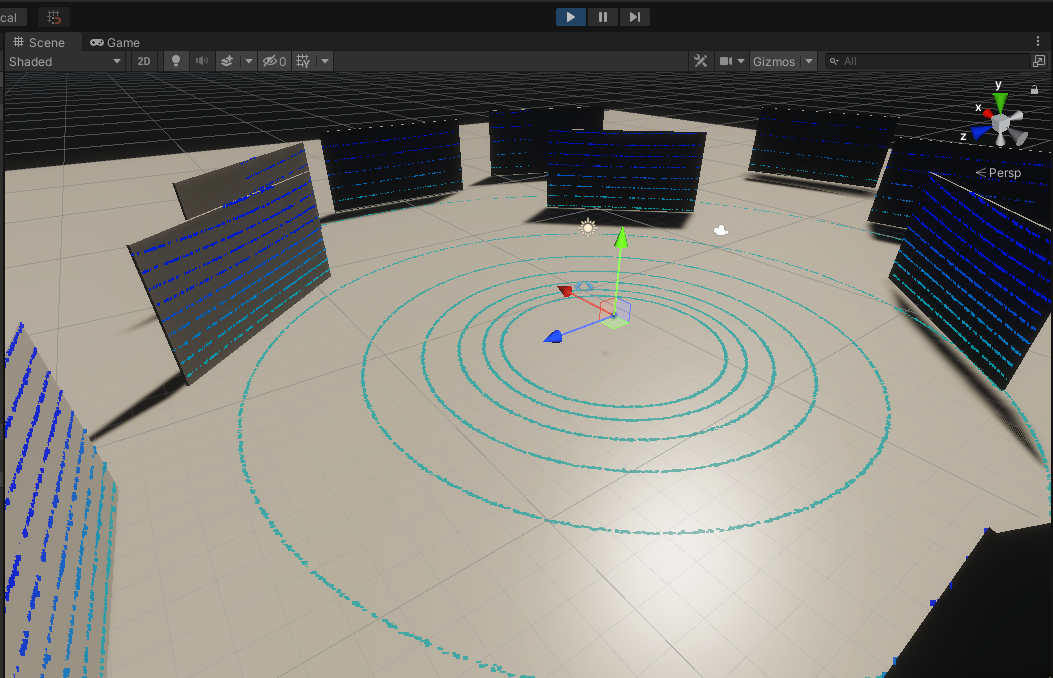

Point Cloud Visualization (script)

A component visualizing a point cloud obtained from RGL in the form of a Vector3 list as colored points in the Unity scene.

Based on the defined color table, it colors the points depending on the height at which they are located.

The obtained points are displayed as the vertices of mesh, and their coloring is possible thanks to the use of PointCloudMaterial material which can be found in the following path:

Assets/RGLUnityPlugin/Resources/PointCloudMaterial.mat

Point Cloud Visualization preview:

Elements configurable from the editor level

Point Shape- the shape of the displayed points (default:Box)Point Size- the size of the displayed points (default:0.05)Colors- color list used depending on height

(default:6colors:red, orange, yellow, green, blue, violet)Auto Compute Coloring Heights- automatic calculation of heights limits for a list of colors (default:false)Min Coloring Height- minimum height value from which color matching is performed, below this value all points have the first color from the list (default:0)Max Coloring Height- maximum height value from which color matching is performed, above this value all points have the last color from the list (default:20)

Read material information

To ensure the publication of the information described in this section, GameObjects must be adjusted accordingly. This tutorial describes how to do it.

Intensity Texture

RGL Unity Plugin allows assigning an Intensity Texture to the GameObjects to produce a point cloud containing information about the lidar ray intensity of hit. It can be used to distinguish different levels of an object's reflectivity.

Output data

Point cloud containing intensity is published on the ROS2 topic via RglLidarPublisher component. The intensity value is stored in the intensity field of the sensor_msgs/PointCloud2 message.

Instance segmentation

RGL Unity Plugin allows assigning an ID to GameObjects to produce a point cloud containing information about hit objects. It can be used for instance/semantic segmentation tasks. This tutorial describes how to do it.

LidarInstanceSegmentationDemo

If you would like to see how LidarInstanceSegmentationDemo works using RGL or run some tests, we encourage you to familiarize yourself with this section.

Output data

Point cloud containing hit objects IDs is published on the ROS2 topic via RglLidarPublisher component. The publisher for such point cloud format is not added by default. Add a new PointCloud2 publisher with ML Instance Segmentation fields preset or create your format by selecting Custom preset (remember to add ENTITY_ID_I32 field which holds objects IDs).

Dictionary mapping

The resulting simulation data contains only the id of objects without their human-readable names. To facilitate the interpretation of such data, a function has been implemented to save a file with a dictionary mapping instance ID to GameObject names. It writes pairs of values in the yaml format:

- The name of the GameObject

- Category ID of

SemanticCategorycomponent

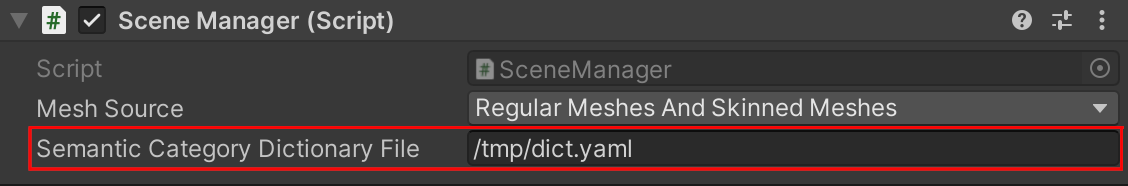

To enable saving dictionary mapping set output file path to the Semantic Category Dictionary File property in the Scene Manager component:

The dictionary mapping file will be saved at the end of the simulation.

LiDAR output restriction

Describes LiDAR faults modeled as a set of rectangular masks obstructing part of the rays.

Example set of parameters for output restriction resulting in one rectangular mask obstructing rays: